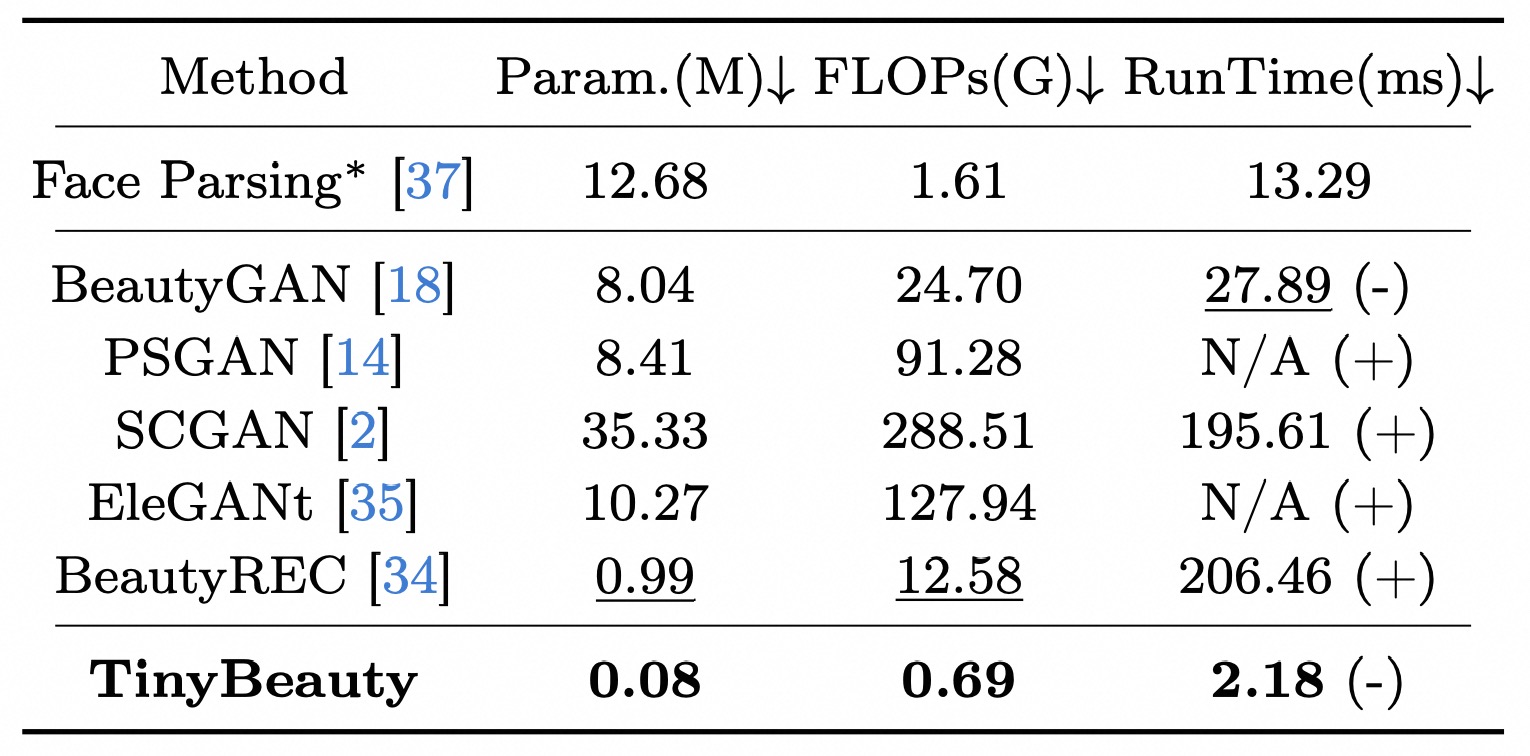

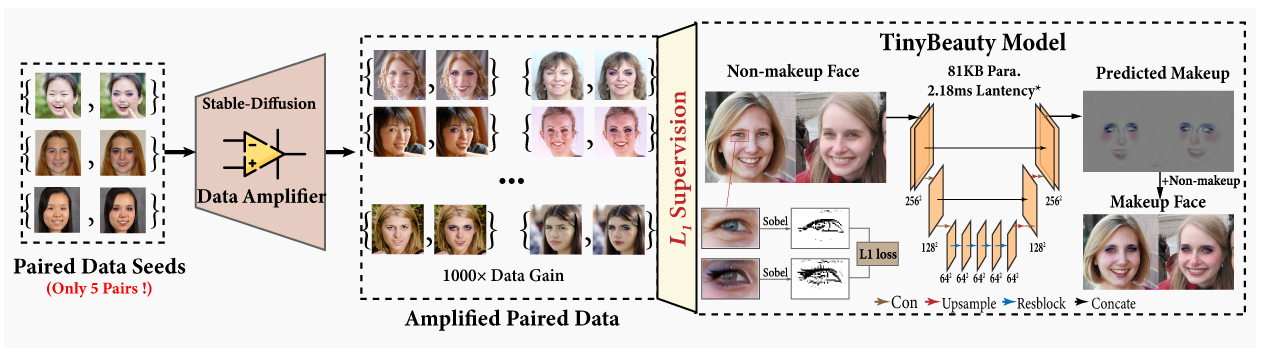

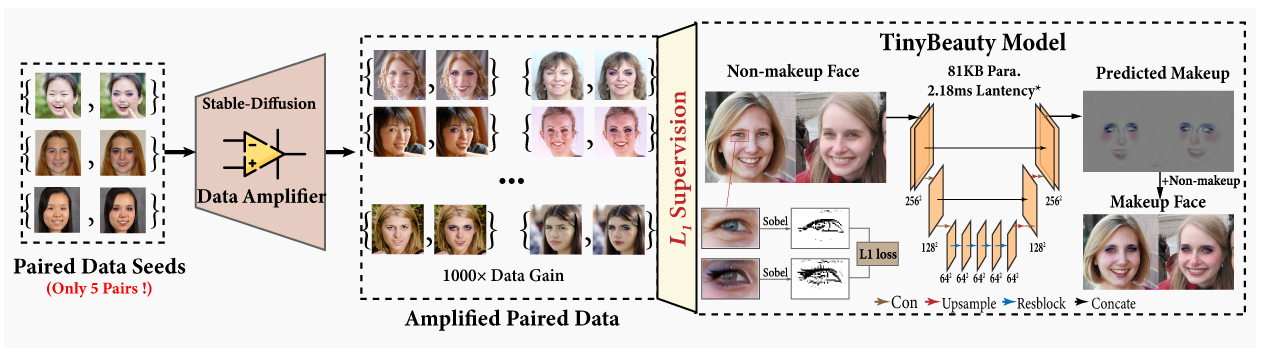

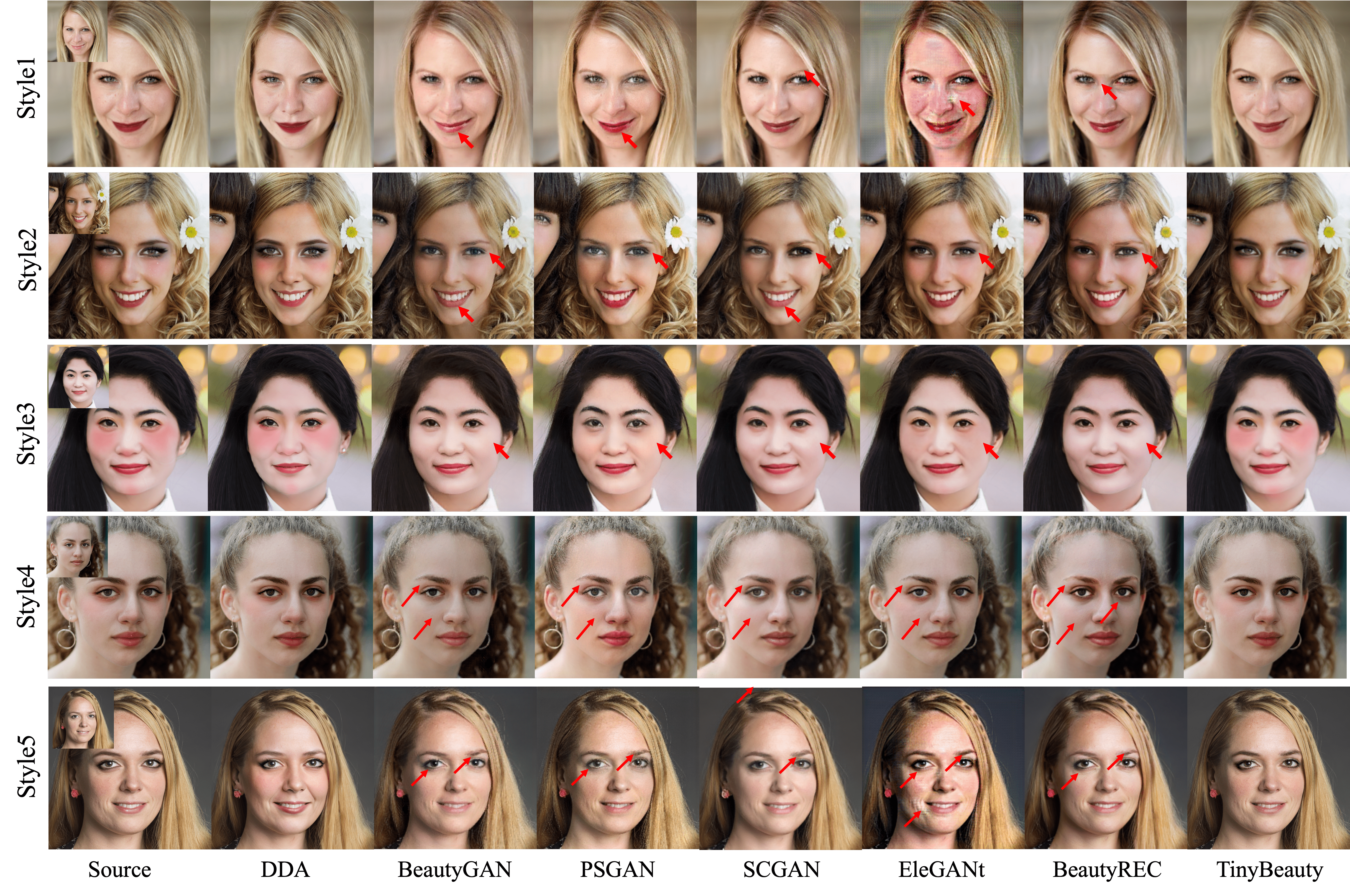

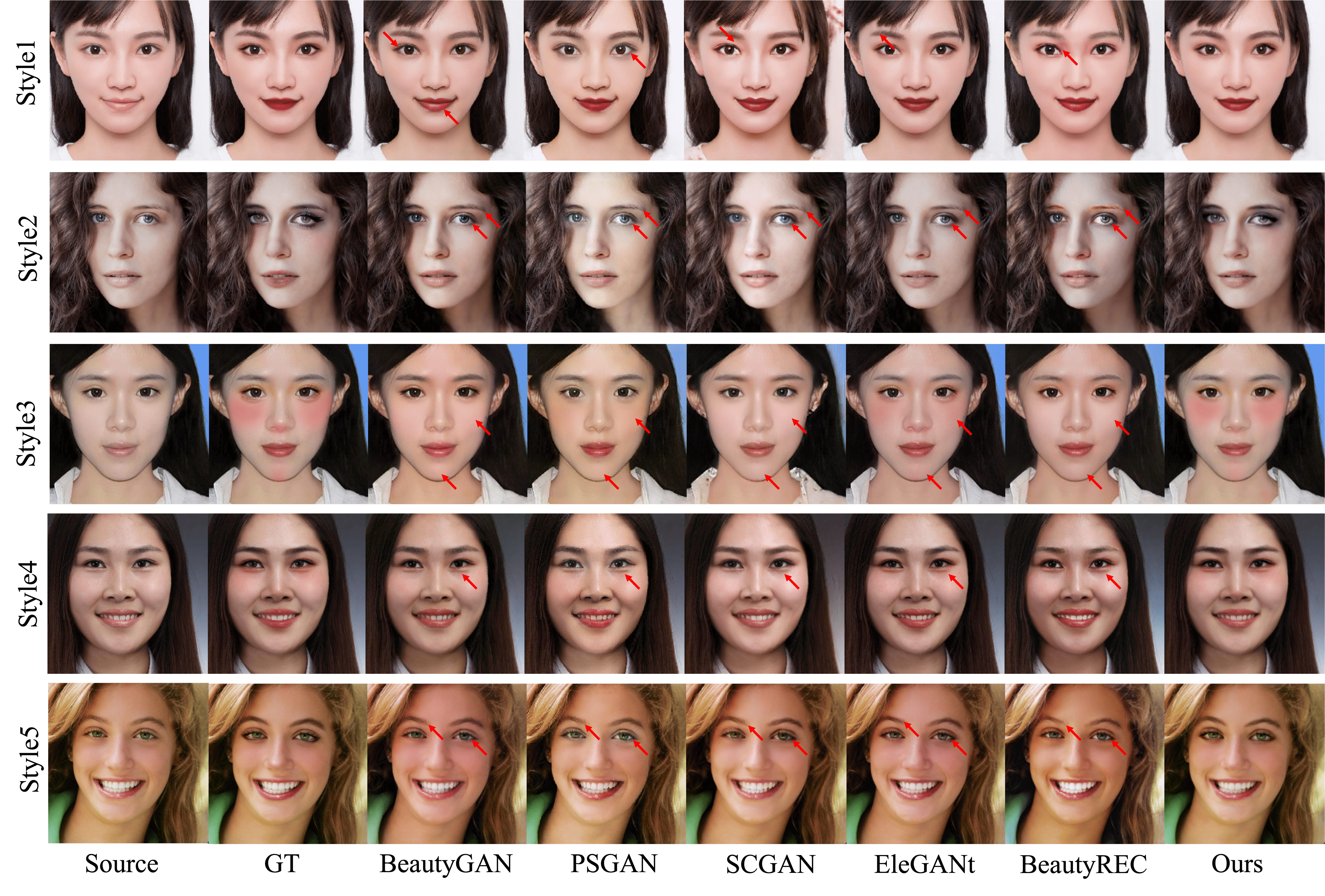

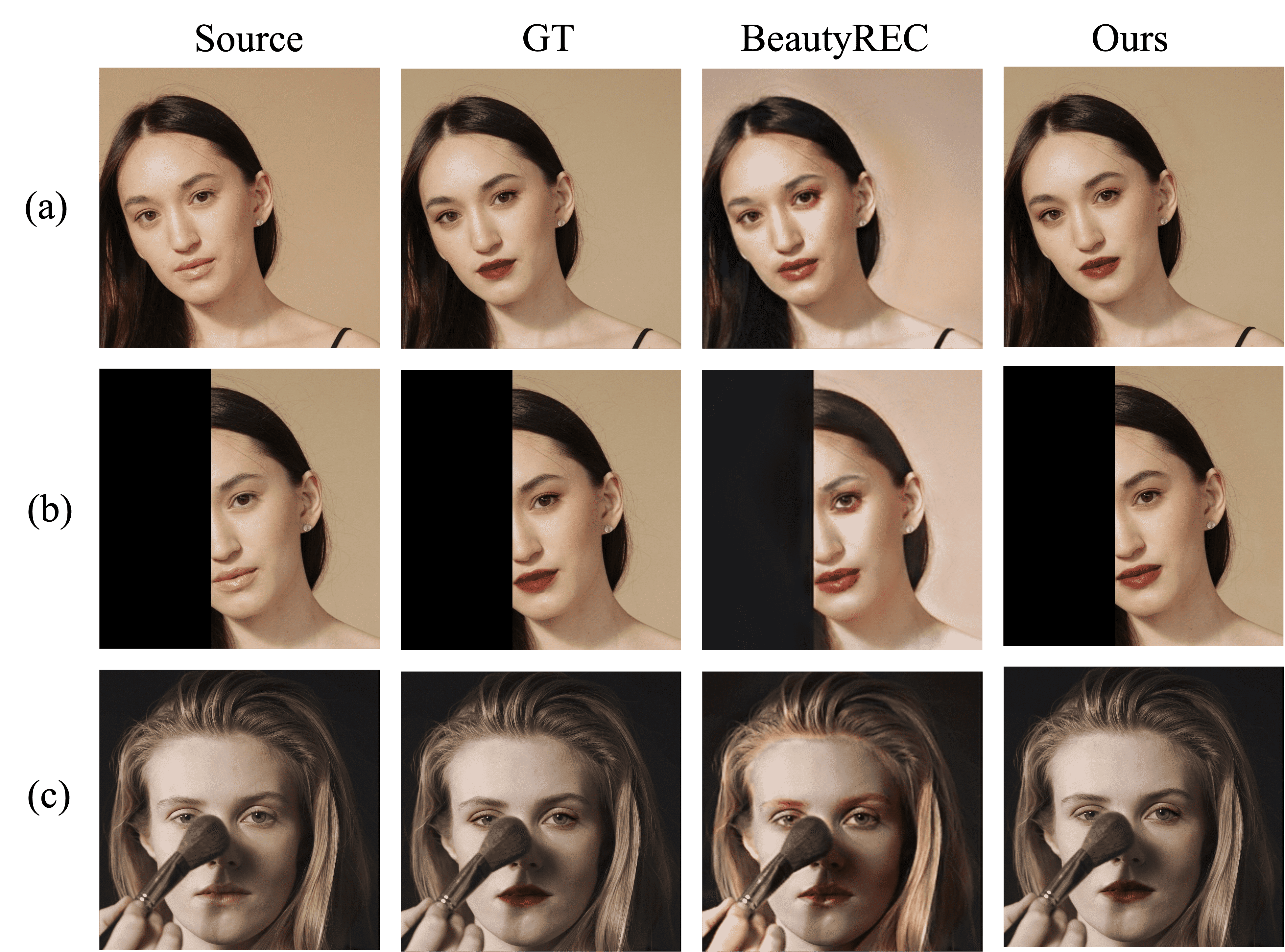

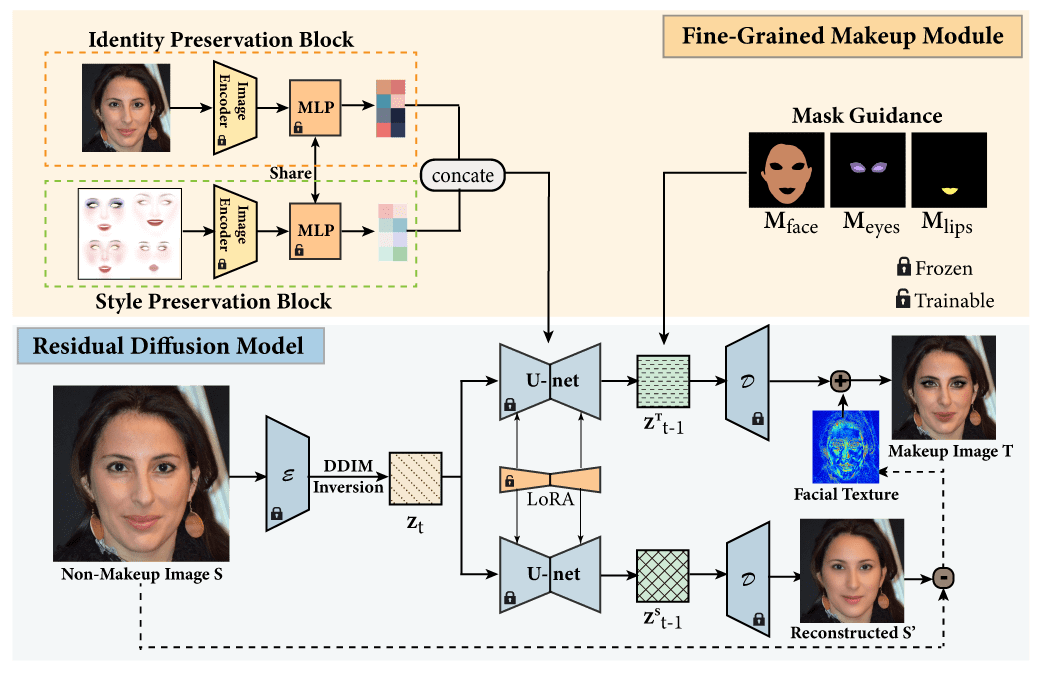

Contemporary makeup approaches primarily hinge on unpaired learning paradigms, yet they grapple with inaccurate supervision (e.g., face misalignment) and a reliance on sophisticated facial prompts (including face parsing and landmark detection). These challenges hinder low-cost deployment of facial makeup models, especially on mobile devices. To solve these problems, we propose a new learning paradigm, termed "Data Amplify Learning (DAL)," alongside a compact makeup model named "TinyBeauty." The core idea of DAL is to employ a Diffusion-based Data Amplifier (DDA) to "amplify" a limited set of images for model training, thereby enabling accurate pixel-to-pixel supervision with merely a handful of annotations. Two pivotal innovations in DDA make this training approach possible: (1) a Residual Diffusion Model (RDM) is designed to generate high-fidelity detail and circumvent the detail-vanishing problem of vanilla diffusion models; (2) a Fine-Grained Makeup Module (FGMM) is proposed to achieve precise makeup control and combination while retaining face identity. Coupled with DAL, TinyBeauty requires merely 80K parameters to achieve state-of-the-art performance without intricate face prompts. Meanwhile, TinyBeauty achieves a remarkable inference speed of up to 460 fps on the iPhone 13. Extensive experiments show that DAL can produce highly competitive makeup models using only 5 image pairs.

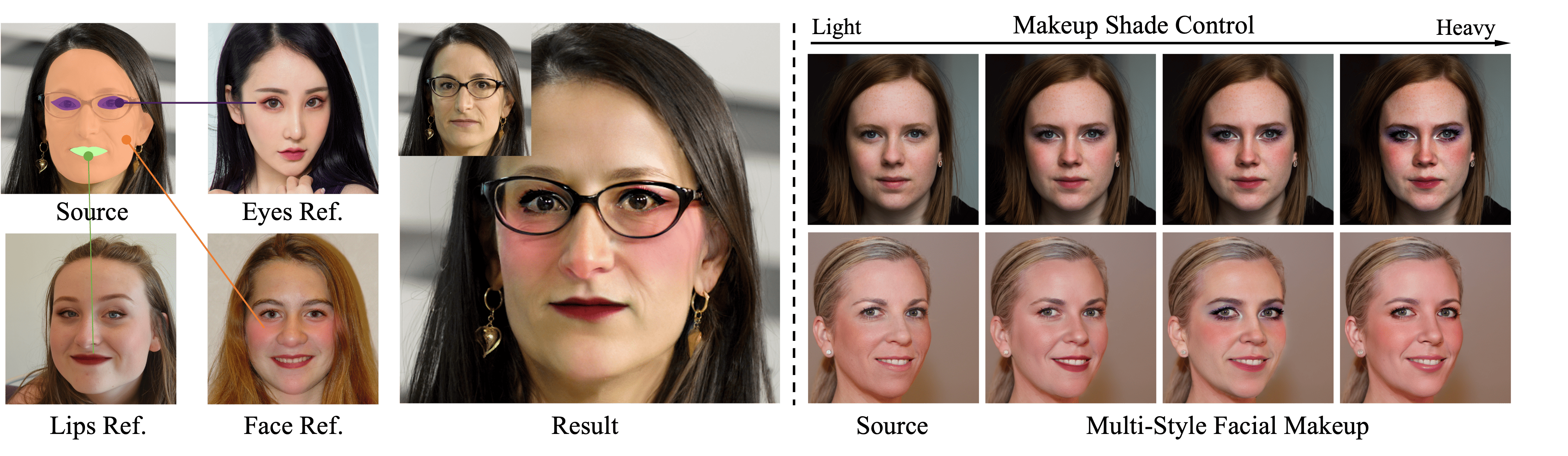

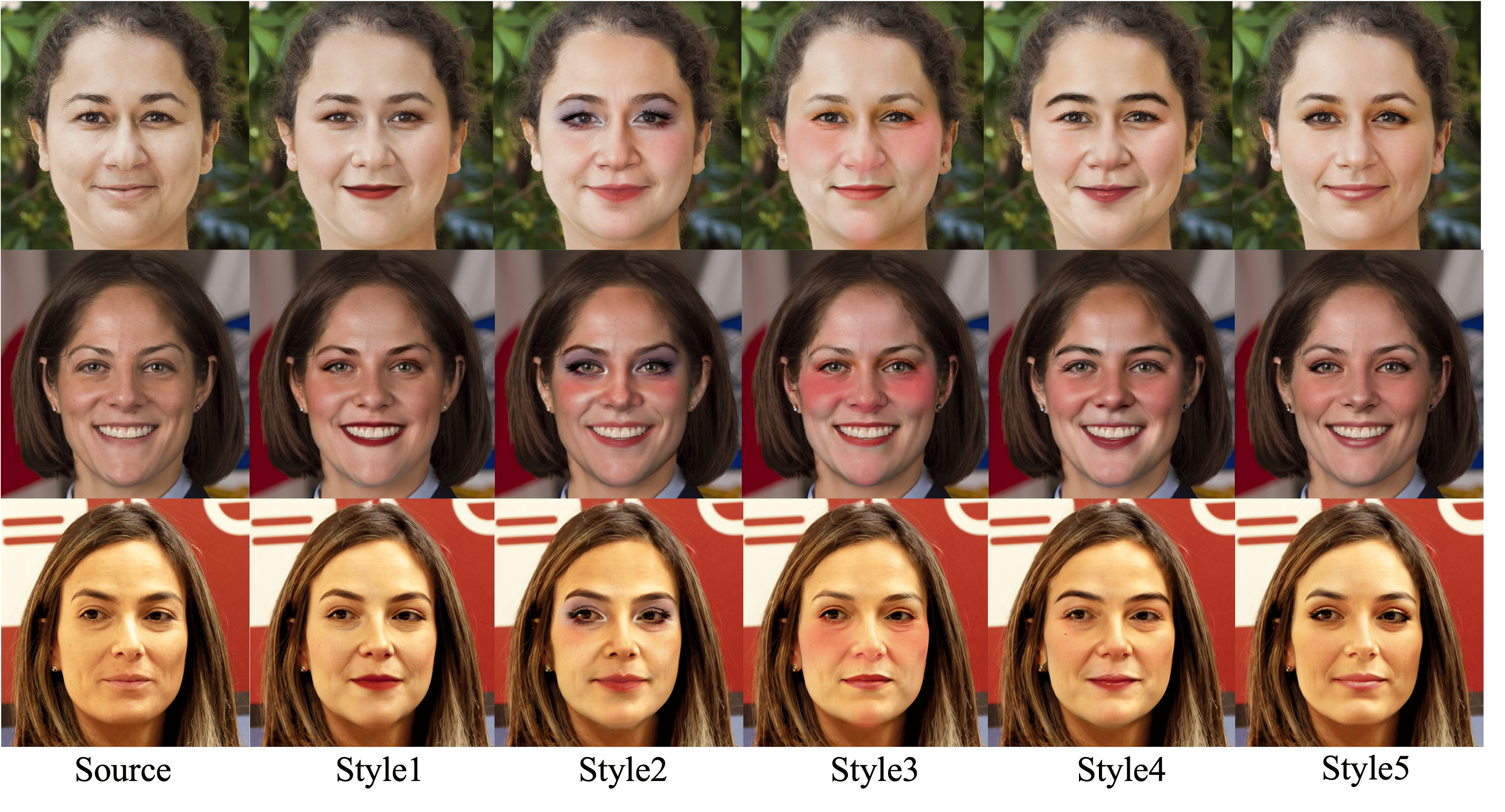

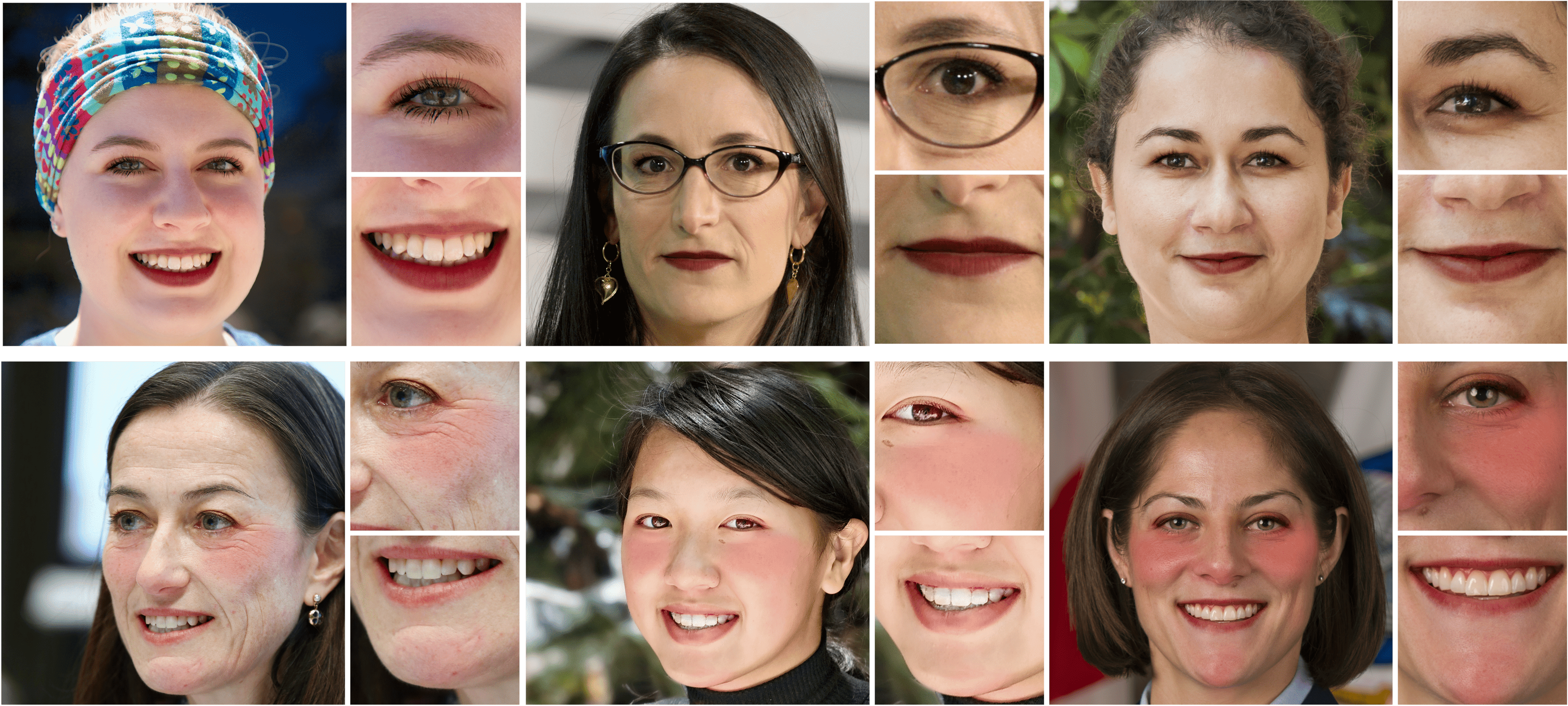

To generate high-quality paired makeup data, our Diffusion-based Data Amplifier (DDA) must preserve the subject's original facial features and skin texture details, such as wrinkles and spots, while producing consistent, precise makeup styles across various portraits. To meet these requirements, we introduce a Residual Diffusion Model that preserves texture and detail, reducing distortion and mask-like effects. Moreover, we propose a Fine-Grained Makeup Module that ensures makeup is applied precisely to the appropriate facial areas and that the generated makeup styles are visually consistent.

Benefiting from DDA-generated paired data, the TinyBeauty model sidesteps the laborious pre-processing of previous methods: it is trained directly with an L1 loss that aligns generated images closely with their targets. As a result, the model can be designed as a hardware-friendly network optimized for resource-constrained devices.
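To make the pixel-to-pixel supervision concrete, the following is a minimal sketch of an L1 loss between a generated image and its paired target. It is not the authors' training code: the function names `l1_loss` and `_flatten`, the nested-list image representation, and the choice to average over all values are assumptions made for illustration only.

```python
from typing import Any, Iterator


def _flatten(image: Any) -> Iterator[float]:
    """Yield every scalar value of a nested-list image (e.g. H x W x C)."""
    if isinstance(image, (int, float)):
        yield float(image)
    else:
        for item in image:
            yield from _flatten(item)


def l1_loss(generated: Any, target: Any) -> float:
    """Mean absolute difference between a generated image and its target.

    Both images are assumed to be pixel-aligned and to hold the same number
    of values, which is what paired training data provides.
    """
    gen = list(_flatten(generated))
    tgt = list(_flatten(target))
    if len(gen) != len(tgt):
        raise ValueError("images must contain the same number of values")
    if not gen:
        raise ValueError("images must not be empty")
    return sum(abs(g - t) for g, t in zip(gen, tgt)) / len(gen)


# Hypothetical 2 x 1 RGB images with values in [0, 1].
generated = [[[0.0, 0.5, 1.0]], [[0.2, 0.2, 0.2]]]
target = [[[0.0, 0.0, 1.0]], [[0.2, 0.4, 0.2]]]
loss = l1_loss(generated, target)
```

Because each generated image has a pixel-aligned target, a loss of this kind needs no face parsing or landmark detection to decide which regions to compare.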